We're About to Lose the Apprenticeship Layer

Why AI should be a trainer, not an executioner of junior talent.

Something uncomfortable is happening.

On one side, founders and execs are whispering (perhaps loudly): “Do we still need junior people?”

On the other side, universities are still graduating them like it’s 2012. (From 2012 to 2025, the US population grew ~9%; undergraduate degrees issued grew ~17% over the same period.)

In the middle, there’s AI.

Not as a vague force or sci-fi villain. But as a very practical tool that is already reshaping how work gets done.

I want to argue something specific (before we all run for the hills): AI might be the best training tool we’ve ever had.

Not because it’s magical or will save us from job losses (jobs will be lost, let’s not be naive).

But AI can compress the time it takes to go from “new” to “useful.” If we don’t intentionally use it that way, the alternative is obvious: We delete the bottom rung of the career ladder.

The Hidden Cost of “New”

Every organization has the same problem.

New people are expensive, because they’re new. Onboarding uses precious resources. Getting a new person to a competent level is a real investment.

Often you see newbies miss invisible steps, struggle to understand what “good” looks like, make preventable mistakes, interrupt senior people constantly and require constant feedback.

Most companies “solve” this with:

Documentation nobody reads

A shadowing process that depends on one generous senior

Or worse, vibes (not to be confused with vibe coding, which I’m a fan of!)

Training is rarely designed. It just sort of…happens.

AI changes that equation. It’s already being proven.

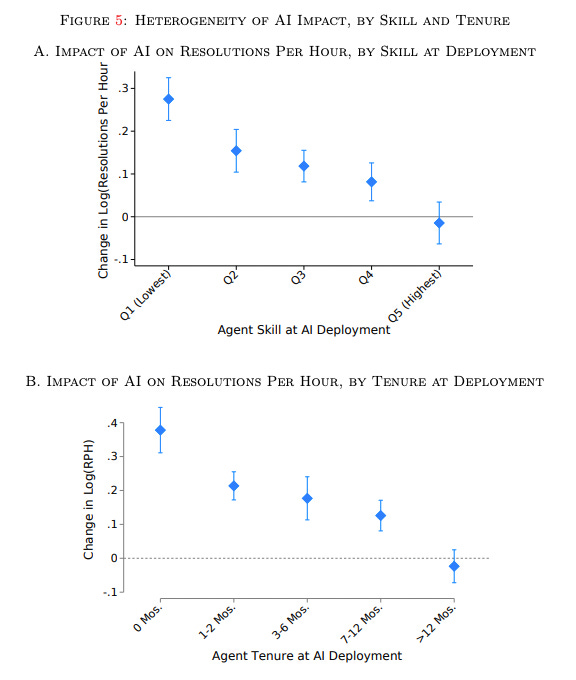

In Generative AI at Work, a joint MIT and Stanford research study, researchers tested the use of AI in a few circumstances with junior and senior people. One example focused on customer support, providing people with AI tools to help them resolve issues. The lowest-skilled human agents and those newest to the job (“human” now requires specifying in a world of AI agents) saw the biggest lift in resolutions per hour thanks to AI.

The study found that novice and low-skilled workers saw a 34% improvement, whereas highly experienced and skilled workers saw minimal improvement.

Let me give you three other examples that I’ve experienced over the last few months.

1. The Hackathon for Developers

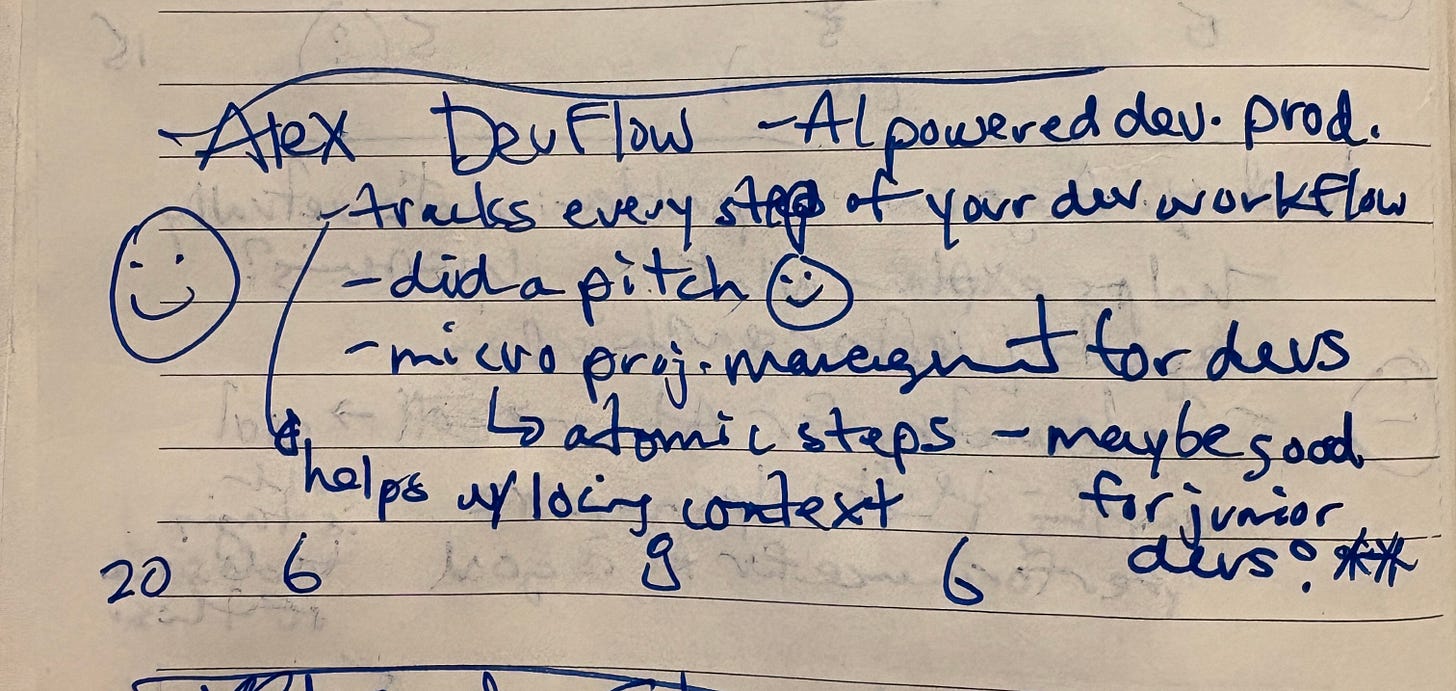

At a recent hackathon hosted at Highline Hub, Alexander Zidros built something called DevFlow. He described it as an always-on task tracker for software developers, helping track the tiny steps between “in progress” and “done.”

During his presentation he said:

“This could be valuable for new developers that may not appreciate all the tiny things that have to be done properly to go from idea to production grade code.”

I chuckled because I had written that exact thought in my notes before he said it.

New developers don’t struggle because they lack intelligence. They struggle because the work has hidden structure.

Senior engineers know:

Edge cases to check

Deployment nuances

Testing expectations

Naming conventions

Performance implications

What “done” actually means in production

Juniors see the feature(s). Seniors see the system.

While AI is doing a lot of the coding, developers are valuable orchestrators, and junior + orchestration = very risky, unless properly trained.

2. The Professional Services Signal

In recent conversations with leaders inside a professional services firm, they told me about internal prototypes designed to review work and provide feedback.

The goal was obvious:

Maintain quality

Speed up delivery

Help new employees internalize standards faster

What struck me wasn’t automation. It was reinforcement.

AI reviewing deliverables against company-specific best practices, industry norms, style guidelines and regulatory requirements isn’t “replace the analyst”, it’s “train the analyst in real time.”

Maybe the most powerful use of AI in knowledge work isn’t generation, it’s review. And review is how standards transfer.

3. Personal Experience Exploring Insurance Opportunities

Some time ago, Highline Beta was working with a partner to explore new venture opportunities in insurance, specifically around smaller agencies and brokers. We learned something interesting: they’re often held back by their ability to onboard new brokers quickly.

Why?

Because most training happens by osmosis.

New brokers:

Sit in on calls

Watch senior brokers negotiate

Absorb patterns

Learn what not to say the hard way

Almost none of that tribal knowledge is formally documented.

When we floated the idea of AI-assisted onboarding, the interest was strong. But there was an immediate constraint: “It can’t just be generic best practices.”

Each firm wanted to infuse the AI with their own expertise around qualifying clients, structuring renewals, handling edge cases, managing risk, building trust and more.

That means extraction. You might think, “How different can one agency’s onboarding be compared to another?” Tough to say, but the perception is there, and perception is reality.

So AI can play a role as:

Knowledge capture engine

Pattern recognizer

Internal playbook enforcer

It’s not about replacing brokers, it’s about accelerating them.

The Fork in the Road

Here’s where it gets uncomfortable.

There are two obvious ways companies can deploy AI.

Path 1: Replace juniors

We’re already seeing signs of this.

A Stanford Digital Economy Lab paper using ADP data found early-career workers in AI-exposed occupations are experiencing disproportionate employment pressure. Entry-level roles appear especially vulnerable.

SignalFire’s 2025 State of Tech Talent report shows new grads now make up a much smaller share of hires compared to pre-pandemic levels.

Dario Amodei, CEO of Anthropic, has warned that AI could eliminate a significant portion of entry-level white-collar jobs in the next few years.

Even if the precise numbers shift, the direction is clear.

The bottom rung is wobbling.

Path 2: Compress time-to-competency

Instead of eliminating juniors, you could:

Reduce preventable mistakes

Make expectations explicit

Provide instant feedback

Turn months of ramp-up into weeks

This is the version I’m arguing for.

Not because it’s guaranteed.

Because it’s intentional.

What Matt Shumer Got Right (And Why It Freaked Me Out)

Matt Shumer wrote a piece titled “Something Big Is Happening.” It’s a wake-up call. Most of you will have read it by now.

He highlights the pace of AI progress, including models contributing to their own development cycles. AI is building itself. Come on now! The feedback loops are accelerating. The capability curve is steep.

His tone isn’t calm. It’s not “incremental change.” He’s saying, “this is moving faster than most people realize.”

He writes: “I know this isn’t a fad. The technology works, it improves predictably, and the richest institutions in history are committing trillions to it.

I know the next two to five years are going to be disorienting in ways most people aren’t prepared for. This is already happening in my world. It’s coming to yours.

I know the people who will come out of this best are the ones who start engaging now — not with fear, but with curiosity and a sense of urgency.

And I know that you deserve to hear this from someone who cares about you, not from a headline six months from now when it’s too late to get ahead of it.

We’re past the point where this is an interesting dinner conversation about the future. The future is already here. It just hasn’t knocked on your door yet.

It’s about to.”

He’s right.

Reading it scared the hell out of me.

Not because I think we’re doomed tomorrow.

But because I have two adult kids in university.

And I found myself thinking: What does the job market look like when they graduate? What does “entry-level” even mean? Who trains them if nobody hires them?

That’s the structural risk.

If AI replaces junior roles, we don’t just lose jobs.

We lose the apprenticeship layer that produces future experts.

Societies run on apprenticeship, whether formal or informal.

Remove it, and five years later you wonder why nobody knows how to make hard decisions.

Schools Are Stuck

High schools and universities are not built for exponential tooling shifts.

They optimize for standardized evaluation, information recall, controlled environments and syllabi that don’t change mid-semester (or ever).

I know there are many people at schools trying to move the needle, but it’s borderline impossible.

The real world now optimizes for ambiguity, tool fluency, self-directed learning and continuous adaptation. You’ll be hard-pressed to find that in school.

Most students are not being trained to:

Work alongside AI

Validate outputs

Build judgment in AI-augmented workflows

Understand where AI fails

Hack

Build things. Anything…just build something…please…

And many companies are not helping. I get the concerns around privacy, security and compliance. Those are real issues. But “ban it” is not a strategy. It’s procrastination. Frankly, the question is whether big companies can even remotely keep up, because the pace of change is faster than ever before.

What AI Training Actually Looks Like

If we’re serious about AI as a training accelerant, it needs structure.

Here’s the practical model.

1. Make “good” explicit

Use rubrics. Define quality thresholds. Codify “definition of done.”

If a junior can’t articulate what great looks like, they cannot self-correct.

AI can enforce and reinforce that definition constantly.

2. Embed feedback inside work

Training is not a course. It’s a loop.

Do work → get feedback → revise → repeat. Dare I say, “build, measure, learn”?

AI should sit inside the workflow, not in a separate portal nobody opens.

3. Capture tribal knowledge intentionally

Interview senior staff. Extract patterns from past work. Turn recurring advice into structured guidance.

Treat knowledge like product. Because it is.

4. Simulate edge cases

Judgment forms under pressure.

AI can simulate difficult clients, objections, compliance traps, unexpected constraints and more.

It’s safer to fail in simulation than in production.

The Cultural Risk

If AI becomes “the boss,” this fails.

If AI becomes a reviewer that replaces mentorship, a surveillance tool, or a blunt cost-cutting instrument, people will resent it.

AI should amplify senior staff, not erase them. It should reduce repetitive review load, not eliminate human judgment.

Humans still need to:

Own standards

Approve exceptions

Model judgment

AI can’t inherit responsibility. It can only inform it.

So Where Does That Leave Us?

I’m worried (but not panicked, despite the hilarious Chris Farley image).

I’m worried about the next generation.

I’m worried about universities moving slowly.

I’m worried about companies waiting too long to experiment or truly change how they work.

But I’m not ready to declare doom.

People still hire people for accountability, trust, nuanced judgment and relationship building.

Those things have not vanished. What has changed is the baseline.

The future belongs to:

People who understand how to use AI

People who know its limits

People who get their hands dirty

People who don’t wait for permission

Matt Shumer is right: the acceleration is real, but the worst response is denial. The second worst response is passive fear.

The best response is intentional deployment.

If we design AI systems that delete the bottom rung, then we break the pipeline.

If we design AI systems to compress time-to-competency, we strengthen it.

That’s a choice.

If I’m thinking about my kids, your kids, and the next generation of founders and operators, I’d much rather fight for the second path, because it feels more sustainable than the alternative, where we all just sit around doing our hobbies because we’re unemployable.

The world may be changing faster than we’re comfortable with, but we still get to decide how we build within it. And right now, the most leverage might not be in replacing newbies. It might be in training them better than we ever have before.

Alternatively, let the newbies burn and everyone goes into the trades or becomes a founder. (I realize that took a quick turn!) Robots are well behind AI, so the trades are safer, and I’ll always bet on entrepreneurs over AI to create net new value. We need more founders. ❤️️

Well said. I would add that hiring 20-somethings who have already normalized using AI in their daily lives can be a huge benefit to organizations, as they will be much more adaptive and receptive to integrating it into their workflows -- and can teach us old folks how it's done.

Watching how my teenage son uses AI to create custom study quizzes tailored to the subject areas he's weakest in really opened my eyes to how well young people have already figured out how to turn AI into a personalized teacher. This is easily translatable to the workplace, and I love having my own AI usage coaches living under my own roof.