There's Nothing Wrong with MVPs

The definition for MVP has been mangled almost beyond recognition. But I still think the concept works beautifully. (#43)

MVP = Minimum Viable Product

The term has been around for over a decade. Over time its definition has shifted all over the place, to the point where we’ve lost the plot.

There are now so many variations or alternatives that it’s impossible to know what’s going on. And I’ll admit, I’ve ignored most of these options because in my mind the term MVP makes perfect sense.

Everyone hates MVPs. 😢

Ash Maurya, author of Running Lean (and other books), recently said, “I’m abandoning the MVP.” (btw, Ash’s books & content are awesome, you should check them out.)

I’ve seen numerous blog posts and Twitter threads suggesting that people abandon the concept completely.

For your reading pleasure, here are people saying MVPs should die. They make some good points, but I find myself overwhelmed with the complexity of the alternatives.

I’ve abandoned “MVP” - by Richard Mironov

Product has a language problem. And we need to fix it. - by Saeed Khan

Instead of MVPs, Maybe We Should Be Releasing SMURFS - by Matthew Barcomb

SMURFS? Seriously? 🤭

Here’s a tweet I responded to, lamenting the demise of the MVP. Others piled on to suggest that the concept should be eliminated:

MVPs are out.

What exactly did they do?

Nothing, but people have misinterpreted and misused the term and concept to the point that they’ve (a) come up with alternatives; and/or (b) abandoned the term/concept entirely.

I’m going to say it: I love MVPs. 😍

There have been multiple definitions over time and that is confusing. Words matter. How we define things matters. I get that. But I’ve looked at many of the alternatives proposed, and they’re often just as confusing, if not more so.

So I’m sharing how I define MVP and what I think it takes to build one properly. I hope it helps!

What is an MVP?

An MVP is the smallest version of a product needed to prove that you’ve solved a user’s or customer’s problem.

Now let’s look at how we get there…

1. Everything is anchored on validating the problem

If you don’t understand the problem intimately, it’s tough to build a good MVP.

You validate the problem through user research and gaining insights into users’ needs. The user research you do will likely include interviewing people, observing them, and building stimulus & prototypes to test specific hypotheses.

I’ve written about these things before, and you can go through the process as follows:

Step 1: How Do You Know You’re Solving a Problem that Matters?

Step 2: How to Apply the Scientific Method to Startups Without Being a Zealot

Step 3: Test First, Build Later: A Guide to Validating Your Ideas With Stimulus & Prototypes

The key thing to recognize is that you’re not building an MVP yet. Stimulus & prototypes are not MVPs. They’re quick, throwaway things you build to test a risky assumption. For example, many startups will build landing pages to test value propositions and early conversion. That makes complete sense. But a landing page is not an MVP, because it can’t solve the user’s problem.

At some point, the term RAT emerged, for Riskiest Assumption Test.

RAT was originally put forward by Rik Higham as an alternative to MVP. Higham argued, “Instead of building an MVP, identify your riskiest assumption and test it. Replacing your MVP with a RAT will save you a lot of pain.”

I’m a big believer in validating your riskiest assumptions first, and doing it without building an MVP. That’s why you interview & observe users and then run tests using stimulus and prototypes (which all end up “in the garbage” once you’ve used them for the purpose of learning).

Assumption Tracker Tool:

This is a free Assumption Tracker Tool (Google Sheet) you can use to assess, prioritize and track assumptions (and the experiments you run to validate them).

Once you’ve built your MVP, you’re still testing your riskiest assumptions—that doesn’t change, which is why RAT doesn’t replace MVP.

For most, the riskiest assumption is around the magnitude of the problem. Is the problem painful enough for an identifiable group of people? Ideally you validate this (with a high degree of confidence) before building your MVP.

In Design Thinking parlance this is called Desirability. In JTBD, you’d say, “I need to prove there’s a job to be done, which isn’t being properly addressed today.”

It’s possible your riskiest assumption is around Feasibility or Viability (again, using Design Thinking terms), in which case you have to validate these things before building the MVP.

Desirability: Does anyone actually care? You’re looking for an unmet need, the job to be done, the pain that people are experiencing.

Feasibility: Can it be built? This might mean there are technical challenges. Or there could be legal, regulatory, or compliance related issues.

Viability: Can we make money? This is not referring to the “Viable” in MVP (I get the confusion). Viability in this context is about the business model, market size and overall financial potential of the idea/business.
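To make “riskiest assumption first” concrete, here is a minimal sketch of ranking assumptions across the three categories. The 1-5 scales, the `impact * (6 - confidence)` score and the example assumptions are all hypothetical choices for illustration. They are not taken from the Assumption Tracker Tool or from any specific framework.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    statement: str
    category: str    # "desirability", "feasibility" or "viability"
    impact: int      # 1-5: how badly the idea fails if this is wrong (hypothetical scale)
    confidence: int  # 1-5: how much evidence you already have (hypothetical scale)

    @property
    def risk(self) -> int:
        # High impact combined with low confidence means "test this first".
        return self.impact * (6 - self.confidence)


# Made-up assumptions for an imaginary invoicing product.
assumptions = [
    Assumption("Freelancers lose hours each month chasing invoices", "desirability", 5, 2),
    Assumption("We can pull payment status from bank feeds", "feasibility", 4, 3),
    Assumption("Freelancers will pay a monthly fee", "viability", 3, 1),
]

# Riskiest assumption first.
ranked = sorted(assumptions, key=lambda a: a.risk, reverse=True)

for a in ranked:
    print(f"{a.risk:>2}  {a.category:<13} {a.statement}")
```

The scoring formula matters less than the habit: write the assumptions down, agree on a rough ranking, and run experiments against the top of the list before building.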

If you have enough confidence that you’ve validated a problem for an identifiable user/customer group, you move ahead.

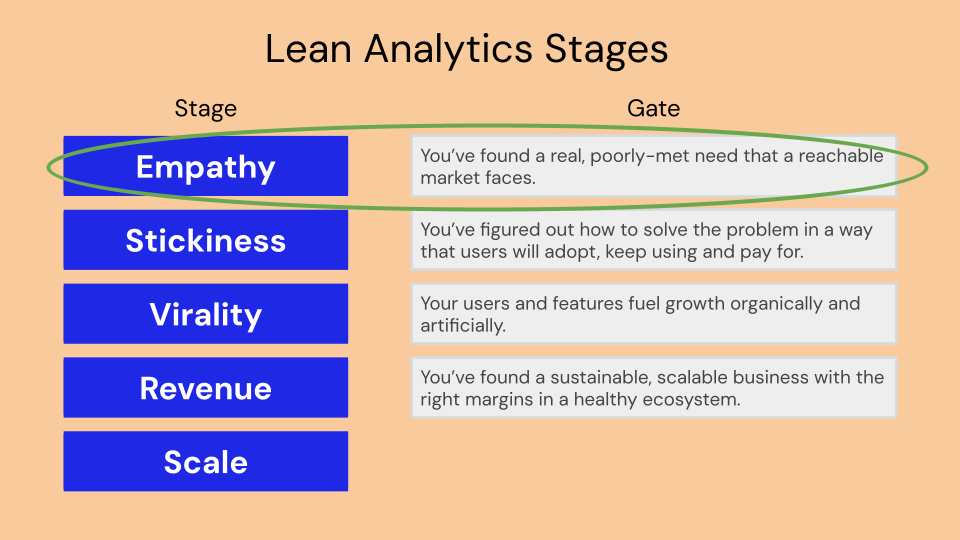

In Lean Analytics, this is about moving from the Empathy stage to the Stickiness stage. You’ve found a real, poorly-met need that a reachable market faces. Now it’s time to build a solution and see if it helps.

2. Define how you’ll prove you’ve solved the problem

What has to be true for you to know that you’ve solved the problem you validated?

Before building anything, figure this out.

Many founders skip this step because they’re so eager to build. But without knowing the definition of “winning” you run the risk of building the wrong thing (including building too much) with no way of knowing.

“Winning” at this stage = Usage (or Stickiness).

Can you build a sticky product? If so, it’s reasonable to assume it’s creating value.

How you define “sticky” is up to you. I typically start by thinking about Daily Active Users (DAU), Weekly Active Users (WAU) or Monthly Active Users (MAU). Is your solution something you expect people to use daily, weekly or monthly?

Instagram and Slack are good examples of daily use apps.

A grocery store app is a good weekly use case (a lot of people buy groceries weekly, not daily or monthly).

Xero, QuickBooks and other bookkeeping apps are good monthly use examples. You may use them more frequently, but there’s heavier use at the end of a month.

Some apps have daily and monthly use cases. If that’s the situation you’re in, you need to know that up-front. Expensify is something that employees use daily or weekly to file expenses, but a bookkeeper or accountant may only reconcile monthly.
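If it helps to see what measuring this looks like, here is a minimal sketch of counting active users per day, week and month from a usage log. The `events` data, the user names and the `active_users` helper are hypothetical. In practice you would likely pull these numbers from your analytics tool rather than compute them by hand.

```python
from collections import defaultdict
from datetime import date

# Hypothetical usage log: (user_id, day on which the user did something meaningful).
events = [
    ("ana", date(2024, 3, 4)),
    ("ana", date(2024, 3, 5)),
    ("ben", date(2024, 3, 5)),
    ("ana", date(2024, 3, 12)),
    ("cy", date(2024, 3, 20)),
]


def active_users(events, bucket):
    """Count distinct users per period; `bucket` maps a date to a period key."""
    users = defaultdict(set)
    for user_id, day in events:
        users[bucket(day)].add(user_id)
    return {period: len(ids) for period, ids in users.items()}


def by_day(d):
    return d


def by_week(d):
    iso = d.isocalendar()
    return (iso[0], iso[1])  # (ISO year, ISO week number)


def by_month(d):
    return (d.year, d.month)


dau = active_users(events, by_day)
wau = active_users(events, by_week)
mau = active_users(events, by_month)

print(dau[date(2024, 3, 5)])  # distinct users active on March 5
print(wau[(2024, 10)])        # distinct users active in ISO week 10
print(mau[(2024, 3)])         # distinct users active in March
```

Which of the three numbers you watch depends on the usage frequency you expect. A monthly-use product with a low daily count is not necessarily in trouble.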

Once you’ve defined the frequency of usage, figure out what people will be doing in your product. How will they use your product to have their problem solved?

Note: You can test some of this before building anything. You can build a clickable prototype to simulate usage and walk potential users/customers through the experience. You can run a co-creation workshop with users/customers to get more information on what features they’d be interested in. People aren’t great at designing solutions to their own problems, but they can still give you insights, and provide you with a peek into their world.

I like to ask, “What is at the core of my product? What is the atomic unit of engagement that will create value?”

Read more on how to define the core or heart of your product.

Nir Eyal’s Hooked model is a great way to think about this:

Here are a few questions you can ask to define what the product will do at its core to solve a user’s problem and create value:

What do you want users to do in your product that drives small but valuable engagement loops, either for them or for others? How quickly can users do what you want them to do? And are you sure those engagement loops are valuable? (Read this post by Ben Williams on engagement.)

How easy is it for users to get value from your product? And how quickly do they get that value? Could you make this easier and/or speed it up?

Why would someone use your product in the first place? Is the value proposition clear enough to motivate people to try?

What are users doing before they use your product? If you understand this, you may be able to find the right triggers to engage them, and get them to the heart of your product faster.

What are users doing after they use your product? This can help you understand ways of transitioning people between your product and the next step in their journey. In the future you may expand into those use cases.

Does the business model (or intended business model) drive more engagement with the core of the product? If people are using your product as intended, and benefitting from the core of it, is that sufficient to allow you to monetize? Usage should drive value to users and to you. If you know what your business model will be, you can figure out if the usage you’re getting from users/customers will drive the business model or not. For example, TikTok has an advertising business model, which is driven by consuming as much attention as possible. Everything TikTok does is designed to keep eyeballs on the app.

Before building, you need to define:

How the product will be used (i.e. what people will do). What’s at the core?

How often the product will be used (i.e. frequency of usage). Beyond DAU/MAU/WAU, you need a sense of how often specific features will be used and in what order. You need to understand the customer journey through your product and the “behaviour loops” you’re trying to create.

Write this stuff down. You can use a Product Requirements Document (PRD) to help think through the components. Here are 3 examples:

Kevin Yien’s PRD template (more complex, but really great to dig into)

We now have the user/customer problem, a defined scope for the smallest version of the product, and a “line in the sand” on how we’ll prove it. Technically we’ve defined all parts of MVP.

Here’s the definition again: An MVP is the smallest version of a product needed to prove that you’ve solved a user’s or customer’s problem.

Next: Let’s build this thing and see what happens!

3. Building & Launching the MVP

To prove that you’ve solved the problem in a meaningful way you need to get through the Stickiness stage:

Let’s break that down:

“Users will adopt” — We’ve defined our expected usage for the product, so we can measure this.

“Keep using” — There has to be consistency in usage otherwise you haven’t solved the problem. You’ll experience churn, but if it’s too high, something is wrong. How you define “keep using” is tricky. But generally, you’ll need months of data to get comfortable that you’ve got the level of engagement you need.

“Pay for” — People taking out their credit cards is a great sign. But this isn’t the only way people “pay for things.” They also pay with their attention and data.

All three of these represent “Viable” in “Minimum Viable Product.”
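One common way to put a number on “keep using” is a cohort retention curve: of the users who started in a given month, what fraction were still active in each month after that? The sketch below assumes a hypothetical `cohort` mapping of user to the months in which they were active (0 is the signup month). The names and data are made up for illustration.

```python
def retention_curve(active_months, horizon):
    """Return the fraction of the cohort active at each month offset.

    active_months: user_id -> set of month offsets (0 = signup month) with activity.
    horizon: number of months to report, starting at offset 0.
    """
    cohort_size = len(active_months)
    if cohort_size == 0:
        return []
    return [
        sum(1 for months in active_months.values() if offset in months) / cohort_size
        for offset in range(horizon)
    ]


# Hypothetical cohort of four users who all signed up in the same month.
cohort = {
    "ana": {0, 1, 2, 3},
    "ben": {0, 1},
    "cy": {0, 2, 3},
    "dee": {0},
}

curve = retention_curve(cohort, horizon=4)
print(curve)  # [1.0, 0.5, 0.5, 0.5]
```

A curve that drops and then flattens suggests a group of users for whom the product keeps solving the problem. A curve that keeps sliding toward zero suggests it doesn’t, no matter how many signups you get.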

A lot of the struggle people have with the term MVP comes down to the “V” for viable. I get it. What does viable actually mean?

Andrew Chen suggested we go with Minimum Desirable Product because “viable” is often too focused on the business aspects of things. Maybe, but as I’ve suggested, viable means that people use it (and don’t abandon it), and there’s an exchange of value (i.e. you give them the product, they are willing to give you money, attention and/or data in return).

Getting through the Stickiness stage is similar to Problem-Solution Fit.

Problem-Solution Fit is another term that’s been defined in numerous ways, confusing people, but here’s my take:

You’ve validated a problem and built a solution that fixes it. You know the solution fixes the problem because you have a set of customers who use the product, tell you they get value and pay for it, and churn is low. You haven’t jumped from early adopters to later adopters, and your next step is figuring out if you can scale customer acquisition.

Note: The “solution” in this definition is the MVP.

To know if you’ve got the right MVP you have to prove value. You do that quantitatively by measuring usage (based on how you’ve defined it) and qualitatively by talking to your users/customers.

An MVP has to be an actual product. It can’t be an experiment designed to learn without providing value. That doesn’t mean an MVP isn’t an experiment designed to learn (it is) but it has to be something people can use. And it can’t be so crappy that its chance of delivering value is really small.

The term is MVP not MSP (which stands for “Minimum Shitty Product”—thankfully no one has suggested we shift to it!)

If you think you can launch crappy products to learn quickly, think again. No one will use your crappy product. No usage, no learning. (Except for learning that what you built sucked.)

Jason Cohen suggests abandoning MVP and replacing it with SLC (Simple, Lovable, Complete). I like SLC. It’s very close to how I think of MVPs, although “lovable” feels like a stretch. I use lots of products that I like and enjoy, but don’t love. I use WhatsApp, but do I love it? Shrugs. It’s good enough for what I need. I use Facebook Marketplace to sell stuff, but I don’t love it. Simple is tricky to define as well. Some things require levels of complexity that might not fit into the SLC definition, but could fit into the MVP one (the minimum you have to build to prove value may be “a lot”).

I understand the frustration with the term MVP. Some will argue that it can be a landing page, ad test or something else that’s not an actual product driving value for users. That definition of MVP is wrong. But it’s pervasive enough that it’s confused the heck out of things.

My definition of MVP works for me. So I’ll keep using it. It won’t be for everyone and that’s OK (although I realize that doesn’t solve any confusion!)

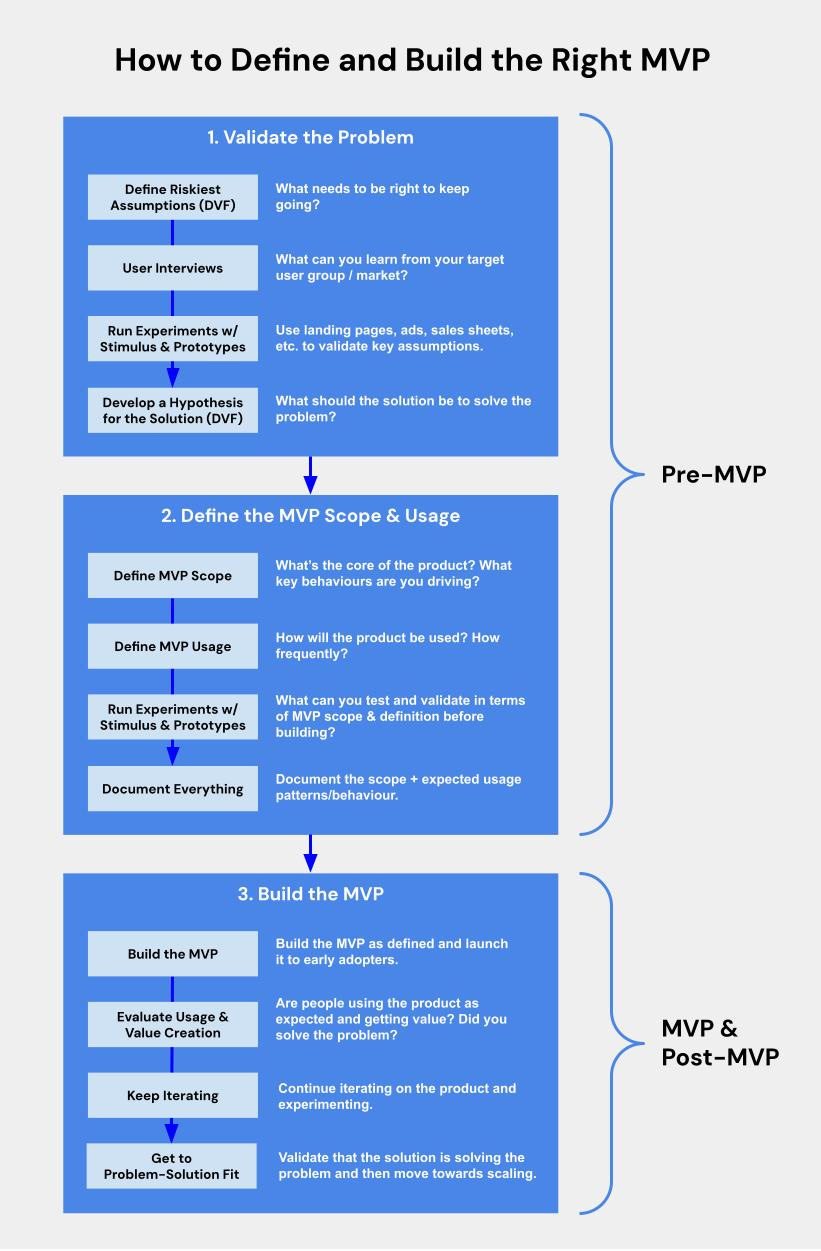

I’ve put together a visual summary / framework for how to think about, define and build an MVP. Hopefully you find it helpful!