Beyond the Hype: Building the Real AI Economy

The industry isn’t crashing; it’s maturing. Now comes the hard part: making the business case for AI through strategy, scalable value creation and security.

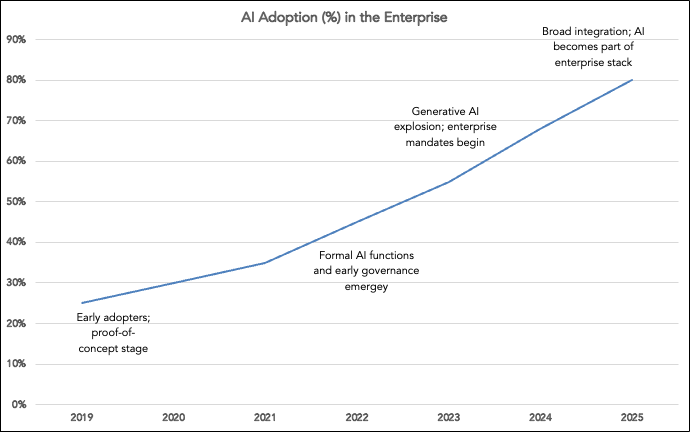

Everyone’s debating whether we’re in an AI bubble. We’re not.

Valuations are crazy and the market is absurdly frothy. But AI isn’t going anywhere. It’s already too integrated into everything we do, even in enterprise (which is typically slow to adopt). When this hype cycle corrects, AI will still be everywhere.

I lived through the dot-com bubble. I was running a small digital agency building websites, web applications and e-commerce platforms. Everyone wanted to “get on the Web.” Then it stopped. Incidentally, it also cost hundreds of thousands of dollars to build an online store… 😝

Back then, people genuinely thought the Internet (and the Web) might be a fad. That’s not happening this time.

We’re early and being forced to adapt at an uncomfortably quick pace.

That’s why you get:

CEOs demanding that everyone use AI, even without a strategic plan.

Thousands of startups trying to automate every workflow possible, often without understanding the industries they’re entering.

Billions of dollars invested by VCs and others, hoping to catch lightning in a bottle.

Every startup claiming they’re an AI startup (since no one really knows what that means).

Trace Cohen writes a strong piece on this (better than I could): This Is Not 1999: Stop Calling It a Bubble.

AI is Already Winning in the Enterprise

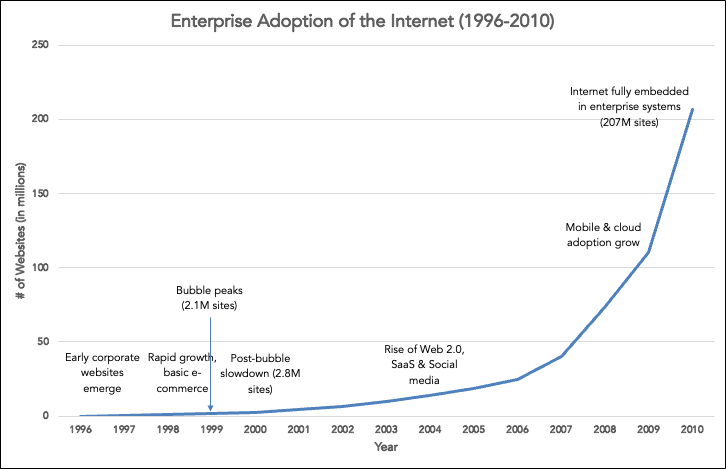

The biggest difference between 1999 and today: enterprise adoption.

In 1999, no Fortune 500 CEO was telling their entire company, “Use the Internet or you’re fired.” Today, every CEO is shouting, “Use AI now!” They might not completely know what that means, but they’re serious.

AI is already embedded in big companies’ operations: customer support, procurement, finance, legal, HR, etc. The adoption is uneven and often shallow, but the commitment is real.

That makes this cycle fundamentally different. We’re not debating whether the technology matters. Everyone agrees AI matters. The question is how to use it intelligently.

When the dot-com bubble burst, public markets were packed with retail investors chasing nonsense IPOs. For many, it was a “get rich quick scheme.” Today, venture capital is funding the mania. (VCs fuelled the dot-com boom too, but companies reached liquidity by going public way too quickly.) Many VCs will lose a lot of money this time around, but those losses are a pittance compared to what was lost in the public markets back then.

If every AI startup suddenly went public, we’d be in bubble-popping territory, because a lot of those startups are propped up by tons of capital and unsustainable growth expectations.

We’ll see a correction, not a collapse.

Enterprise AI Adoption is Causing Chaos

A friend recently went through vendor onboarding with a major enterprise. For the first time, he saw AI-specific security questions:

What’s your policy on using AI tools?

Have you trained employees on safe AI practices?

Do you have cyber insurance that covers AI-related incidents?

They still cared about the usual things — where data is stored, encryption, access controls, etc. — but now they’re explicitly calling out AI use as part of their risk profile.

The issue isn’t just where data lives anymore. It’s how data might be exposed or processed through AI tools.

That’s the new risk lens. Once company data touches an AI system, it’s perceived as being “out there.” Whether that’s technically true or not doesn’t matter. Perception equals risk.

For decades, enterprises asked, “Where is my data stored?” Now they’re asking, “What AI systems does my data touch?”

This shift is creating massive uncertainty. Most vendors don’t know how to answer these questions, and most enterprises don’t know how to evaluate the answers.

Meanwhile, nearly every SaaS tool has added AI features. You might not even realize that a tool you’ve used for years is now sending your company data through OpenAI, Anthropic, Mistral, or others. When your corporate IT team asks, “Are we using AI?” you may say no…and be wrong.

My bet: startups will differentiate on the “secure use of AI.” SOC 2 used to feel like a necessary evil (or at least an annoyance) for startups selling into the enterprise. That’s changing. While SOC 2 is technology-agnostic, auditors are already asking AI-specific questions. Expect this scrutiny to grow fast.

Recently, I was talking to a startup that updated its Terms of Service to account for how it uses AI. It had launched a new AI-driven feature and realized customers needed clarity on how their data was being handled. Sounds simple, but it’s not. Legal teams are scrambling to figure out what “responsible AI usage” language looks like.

BTW, their customers aren’t big enterprises either, but everyone (across all business sizes and sophistication levels) is twitchy about this stuff.

Executives are racing to get their companies using AI, and everyone else (IT, security, procurement, etc.) is catching up. The lack of a coherent AI strategy makes this especially painful.

Most big companies don’t have an AI strategy. They’re experimenting everywhere without clear goals or alignment. One team is building copilots. Another is using ChatGPT for reporting. Another is banning it entirely. The result is chaos disguised as progress.

If you’re looking to develop an AI strategy and bridge the gap to execution, check out WorkLearn Labs.

WorkLearn Labs is a portfolio company launching soon. They’re focused on helping people define the right AI projects, assess ROI and connect the dots between strategy and delivery.

The next enterprise wave won’t just be about using AI. It’ll be about governing it.

Expect this over the next couple of years:

AI training becomes mandatory. Like annual phishing and security training, employees will need “safe AI usage” certifications. Startups may eschew heavy-handed security training, but I suspect AI training will find its way to them as well.

Formal AI policies become table stakes. Companies will define what can and can’t go into AI workflows, when to disclose usage, how to vet vendors and models. The Australian government just released an AI policy template. This will be messy.

AI audit software emerges. New tools will track which LLMs are in use, what data is shared, how internal apps route data, whether prompts are logged, etc. Maybe these tools already exist (just like there are tools for tracking the software you use). Companies will demand visibility into AI usage within their organizations and with their vendors.

AI is Changing “Build vs. Buy” But SaaS Isn’t Dying

AI tools for software development are amazing. You can build a lot, very quickly, and the tools are only getting better.

I’m a big fan of Lovable. I built a corporate venture studio design tool in 2 days.

Recently, at Highline Beta, we started building a new “Contacts tool”. The goal is to aggregate our collective contacts (primarily from LinkedIn), enhance the data (using AI) and make those contacts available to our team and portfolio. Instead of a portfolio CEO pinging us and asking, “Do you know any Series A investors that have recently invested in fintech and are at the earliest stages of their fund?” they can use the tool.

We could have used a CRM instead, but:

We wanted to experiment with building something on our own (honestly, it’s just fun!). Internal experimentation is a valid reason to build vs. buy, especially if you’ve mandated that your employees “use AI”. Just remember that experiments don’t always work.

It gives us the opportunity to tailor the software to our precise needs. This is one of the most common reasons to build rather than buy: you get exactly what you want. Of course, every time you want to add something, you have to build it.

It should be less expensive than a monthly subscription to a CRM, although I don’t know what it’ll cost (more on that in a moment). The SaaS license fee model is evolving. For the Contacts tool we built, we would need 30-40+ licenses (which would be expensive in a CRM). Using Lovable, we don’t pay per user, we pay for use.

Still, I’m not convinced companies are going to replace a ton of software they buy with software they build.

There’s a lot of discussion about AI agents replacing traditional SaaS software. I can see that happening, but AI agents are still software. Whether you buy a platform for building agents or agents focused on specific jobs, you’re still buying technology (software). AI agents are literally software as a service (SaaS), just repackaged and priced differently. Lovable is a SaaS tool.

Building a lot of software in-house (versus buying it) has implications:

You have to maintain the software. Users will want more features (and since you told them it only took two days to build the whole thing, they’ll be clamoring for more!) They’ll report bugs and want support. You now have to manage all of that (you can’t outsource it to a vendor).

You’re probably building the software side-of-desk. Some companies will have teams tasked with building internal tools (which is where most AI software is replacing “buy” decisions). Those teams are supercharged by AI dev tools, which make their jobs way easier. But the rest of us are developing software while doing our day jobs. If building software with AI is a side hustle, there’s risk.

If the people who built the software (even if it was vibe coded) leave, you lose institutional knowledge. We’re going to see hundreds of thousands of orphaned apps within companies that no one knows what to do with. When bugs emerge, or someone wants to extend the software (or integrate it securely into other systems), watch out.

You have little idea how much the software will cost to operate. You may not be paying a subscription fee, but you’re paying for credit usage and that’s tough to measure and plan for.

With the two tools we’ve recently deployed at Highline Beta using Lovable, I have no clue what they’ll cost to run. I barely know what they cost to build, because I wasn’t tracking our time doing it, and I wasn’t tracking credit usage. A couple of times Lovable told me that we were at (or fast approaching) our credit limit and should top up. I did. It wasn’t a lot of money, but I have no clue how long the top-ups will last.

Tracking and predicting future credit use isn’t easy. A lot of AI tools obscure the numbers; they don’t really want you constantly tracking credit use. They want you to use as many credits as possible and get hooked, so they can sell you more. It’s long-distance calling and phone minutes all over again.

I also have very little insight into Lovable’s security. It makes security recommendations, which is helpful, but I don’t know how good a job it does designing secure software. We can’t assume people prompting a tool to build software applications know anything about security! When everyone within an organization can replace vetted SaaS products with unvetted, “randomly generated” vibe-coded products, it’s a security nightmare.

Companies will build tools using AI instead of buying software. When traditional SaaS renewals come up, companies might ask if they can build a replacement for less. But it’s not going to shift the “build vs. buy” decisioning exclusively to build. Build comes with a lot of overhead and headaches that can be worth paying others to deal with. Division of labor is a thing.

Here are some further discussions on these topics:

AI coding tools upend the ‘buy versus build’ software equation and threaten the SaaS business model

I Found 12 People Who Ditched Their Expensive Software for AI-built Tools

Is SaaS Dying for Good? A Look at How AI is Reshaping the Future of Software

Build vs. Buy in the Age of AI: Why Smart Companies Choose to Purchase Non-Core Software

So Where Are We Headed?

We’re in a messy discovery phase that will last several years.

The combination of enterprise mandates, undisciplined startup building, and opaque infrastructure costs won’t cause a collapse. It’ll trigger a cleanup—a period of AI rationalization where companies focus on what actually works.

Here’s what I expect:

AI compliance becomes a big industry. Training programs, certifications, audits and firms dedicated to AI governance will proliferate. Big companies will buy in first, followed quickly by startups.

Transparency becomes a selling point. Startups that clearly explain their AI stack will stand out. Startups will need to address serious security policies earlier than they’re used to.

Hybrid SaaS models explode. Software becomes both product and platform for AI workflows. This is already happening, and will continue.

Big companies build a bunch and then buy. Experimentation isn’t the exclusive purview of startups. Big companies will experiment a ton to replace their complex and expensive software stack, overdo it and then settle back into purchasing SaaS + AI tooling (with a heightened nervousness around how their data touches integrated AI feature sets).

Unbundling always leads to re-bundling. We’ve seen this pattern for decades: big companies buy a pile of point solutions, then realize they need “one app to rule them all.” Right now we’re in an unbundling phase driven by the explosion of AI tools and proliferation of startups. But that’ll shift. As major enterprise platforms bake AI deeper into their products, large companies will consolidate again, bundling for simplicity, security and procurement sanity.

Procurement becomes the bottleneck. Enterprise customers (and smaller customers) tighten up their requirements and expectations. If your AI story doesn’t pass the test on security and clarity, you don’t make the shortlist.

AI isn’t going away. It’s already built into how companies operate and how people work.

The real challenge now isn’t belief, it’s competence. Most organizations are still figuring out what good AI adoption looks like. They’ll need to learn how to use it responsibly, measure its value, and build the right guardrails as they go. Startups will have to adapt, even faster than before, because demands on them in terms of how they use AI securely will increase.

This isn’t about hype cycles anymore. It’s about execution.
