Will AI Automate Everything?

In a rush to build AI tools, founders are forgetting who should be at the centre of everything: humans. (#69)

AI is taking over. Almost every startup I speak to is building a copilot (for something) and/or AI-driven automation. Founders are looking for any and all tasks that seem slow and pitching AI as the answer.

Does this make sense?

Yes and no.

Logically, speeding up tedious, repetitive tasks makes sense. Why wouldn’t people want to save time?

AI is going to be better at a lot of (most?) things in the next 2-5+ years. Who doesn’t want to optimize their lives and work?

AI will connect dots and create new solutions to problems faster than humans. Who doesn’t want better, faster, cheaper answers?

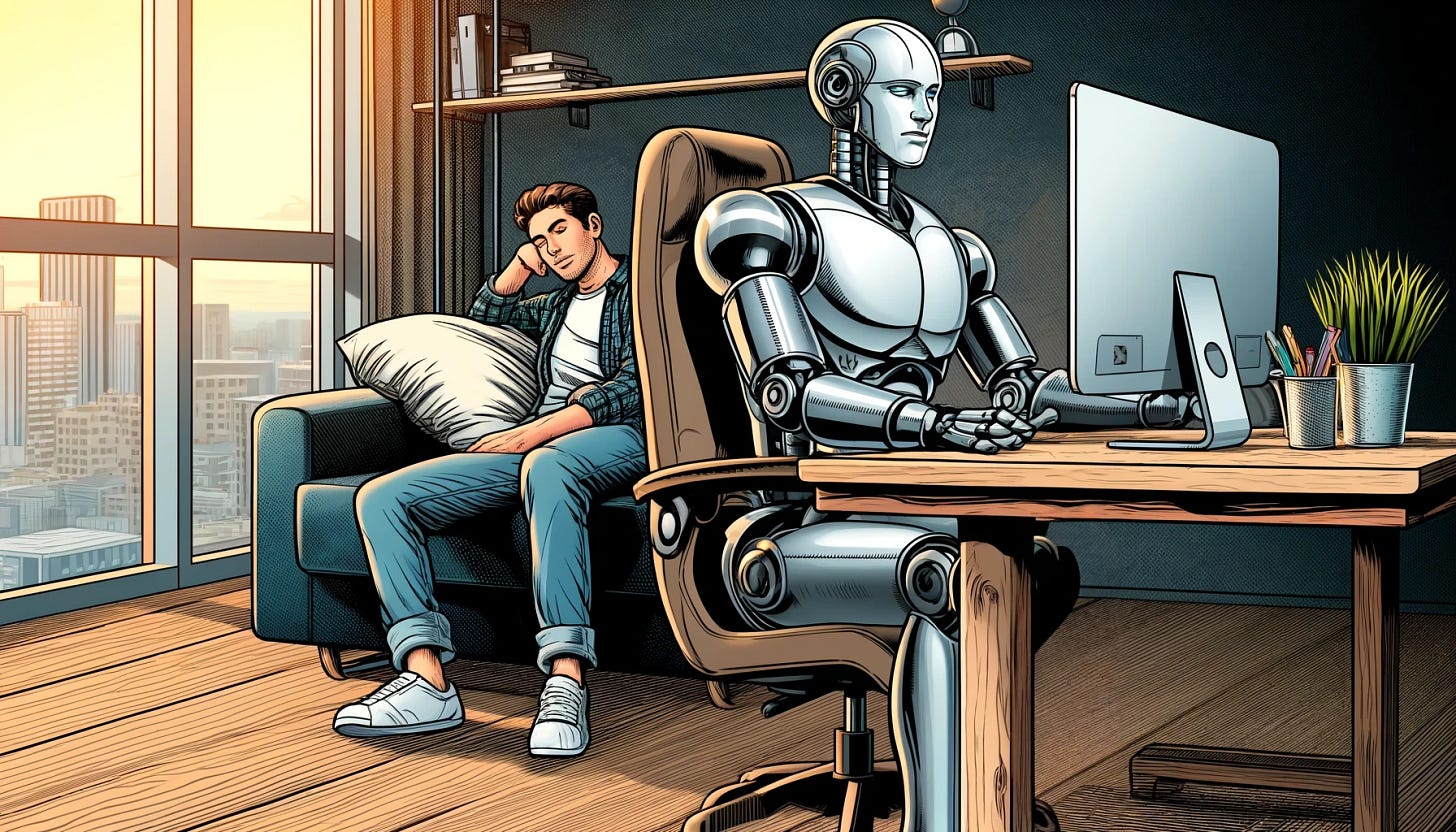

Sign me up. Automate everything and I’ll take a nap.

If only it were that simple.

A lot of founders use this logic, but fail to realize one key thing: They’re selling to humans. And humans are complicated.

Founders building AI/automation tools focus almost exclusively on functional needs. But we don’t use/buy things exclusively to solve functional problems. We are heavily influenced by social and emotional factors that shape how we perceive problems, how we judge their importance and their impact on us individually, and how we identify the right solution.

Founder: “I can automate that for you and save X hours per month!” Makes sense. The question is whether your users/customers actually care.

How do you know if you’re solving a problem that actually matters?

Here’s the formula: User + Need + Insight (Deeper Need)

Deeper Need = Social & Emotional influences (the underlying “why”).

Founders who ignore the social and emotional components of a problem will lose. They miss two key things:

Truly understanding and defining the value proposition.

Homing in on the right users, understanding why they buy (or don’t), and recognizing the difference between who buys their products and who uses them.

These things are always important to understand. In everyone’s rush to automate everything, people are skipping steps.

In the last two weeks I’ve spoken to numerous founders who said the exact same thing: “I came across a process that seems stupid. Why are people doing it that way, when it could be automated with AI?”

Why indeed.

Let’s ask this question in another way: Why would people be hesitant to buy an AI/automation tool from you?

Fear of Something New

A lot of people are afraid of new things, including technology. AI seemed to pop out of nowhere, hit us like a freight train and take over in an instant. While ChatGPT has ~180 million users (which it acquired very quickly), we’re still in the early adopter phase for AI. It’s going to take time for legacy businesses and late adopters to jump in with both feet.

Startups often start by selling to other startups, because there’s no technology fear, but that market is limited. Meanwhile, a ton of startups are going into traditional industries with lower tech adoption touting their awesome copilots, forgetting that people are scared. These startups may be too early (missing the right market timing = ☠️).

Security

People are just wrapping their heads around the challenges with security, phishing scams, data breaches, etc. and now we’re saying, “Upload everything you’ve ever had into this system, because then it’ll know everything and help you.”

Data breaches are on the rise (1 | 2) and becoming more public. People are concerned, and they should be. So it’s safe to say people may be skeptical of your ChatGPT wrapper tool with no credibility, or of the security claims you make.

When you consider the speed at which AI tools are launching, improving and taking over more use cases, you can appreciate why people’s fears compound and escalate. It’s too late to put the genie back in the bottle.

Job Losses

Let me share a transcript from a recent conversation I was privy to:

Founder: “Hi there. I’m the founder of Acme. We have an AI copilot that automates [put in whatever process you want here], which will save you tons of time and money.”

Prospect: “That sounds interesting. Doing [that process] does take a long time.”

Founder: “I know. And it doesn’t have to. How many people on your team have to do [that process] every week?”

Prospect: “I have a team of 10 right now, and each week they’re doing [that process] at least 25% of the time.”

Founder: “Wow. We can definitely speed that up for you.”

Prospect: “Incredible! So how many people on my team do you think I can fire?”

Founder: “Um…well, is that really what you want to do? I never intended my solution to cause people to lose their jobs.”

Prospect: “Well if I don’t fire most of them, how am I really saving money? Saving time is cool, but what else would I have my team do?”

Founder: “Honestly I don’t know. I just wanted to build a cool AI tool and make millions doing it.”

Prospect: “Sorry I missed that. I was telling HR to fire the team.”

OK, I’ll admit, I was not privy to this conversation, but it’s where things are going and it’s what buyers are thinking. Yet I’ve never seen a startup use “fire your team and use our AI” as a value proposition on their website.

Usually when founders realize the value proposition might be “firing the team” they switch gears and say, “That’s not what we do. We empower the team to do more with less!” Got it, so you’ve built an empowerment tool, not a job hatchet.

But you’re replacing something, right? If you’re not replacing humans, then you’re replacing a more expensive solution? If you’re not replacing anything, maybe the problem isn’t painful enough to begin with.

Job loss is a real fear.

If you sell bottom-up (to the user), you have to be very clear that the value proposition isn’t replacing that user.

If you sell top-down (to the enterprise executives), you have to be very clear how the time and money they’ll save isn’t 100% correlated to firing people (which isn’t a great look) but is still valuable.

Will job losses really happen?

A number of economic reports claim that AI is going to kill jobs. Others don’t think so. They suggest that productivity increases will allow people to do more high-value work, which will subsequently increase a company’s revenue and allow it to hire more people.

Job losses will happen in certain areas. Companies will hire fewer people in certain roles. But new jobs will emerge. Companies will increase productivity and use that to grow (requiring more people). So we’ll see some good (job creation) and some bad (job losses).

Aaron Levie, founder of Box, just wrote an epic tweet about this, arguing against job losses.

I’d encourage you to click through and read the whole thing.

What’s important to remember is not whether jobs are actually killed, but how customers think it will play out and how they feel about it.

Budgets and Power

In big companies, whoever has the biggest budget usually has the most power. It’s why small innovation teams or labs that are sequestered away from the core business (to give them the space to innovate/work differently) often get squished.

If you’ve ever worked at a big company (or sold into one) you might have heard the phrase, “Use it or lose it.” They’re referring to their budgets. If you’re allocated $100 and you only spend $80, how can you ask for $100 the next year, or $120?

Companies preach financial prudence, but power isn’t allocated to people with small teams and small budgets. “What’s your headcount? How big is your team?” These are common questions (even for startups). I find these questions silly—I’d rather have fewer people super optimized to do a ton of work—but most of the world doesn’t actually work that way. So your fancy new AI tool that will automate away half of a corporate executive’s team may not sell as quickly as you thought. It may cause corporate-wide panic.

Politics (in organizations) is a real thing. How companies are run, how budgets are allocated and how power is distributed (or taken) all matter. This is why it’s critical to understand the social and emotional elements of the problem you’re trying to solve. Ignore all of this at your own risk.

People’s Self-Worth

Imagine telling someone that they’re no longer needed because an AI is taking over. Not only has that person lost their job and their income, but also (a portion of) their self-worth. People’s self-worth isn’t tied exclusively to their jobs, but you can’t separate the two. (There’s plenty out there that says you shouldn’t tie your self-worth to your job, but people do.) Even if people know their jobs are repetitive and boring (the type of thing AI is perfectly suited for), it doesn’t mean those people don’t care. While you’re tripping over yourself in an overzealous attempt to automate everything, think about the humans.

Throat to Choke & Trust

If AI/automation takes over everything, who is left to blame? The prompt engineer? The AI itself? Will people yell at their AI copilots? (Probably. 😞)

People like blaming other people, so they don’t have to blame themselves. I know that’s a pessimistic view, but humans are wacky like that. Even if we don’t eliminate all the humans, once we’re utterly dependent on our AI copilots for everything, the humans are just there to push a few buttons and make sure there’s enough compute power and electricity. You can’t really blame the humans at that point, because they’re not running anything or making any decisions.

Let’s flip this around and talk about trust.

Humans like having other humans do specialized jobs for them. Despite the frustration we may feel working with lawyers, accountants, recruiters, real estate agents, etc., we still use them a lot. We like to outsource things to other people so we don’t have to do the work (in some cases we can’t do the work, in other cases we don’t want to spend the time). My dad calls this “the division of labor.” He does what he’s good at and outsources the rest.

There’s something comforting about knowing that someone with expertise is doing a job for you. We trust people to do the job (until they break our trust).

A lot of founders will argue that these middle-people can be eliminated with AI. It’s the same argument we’ve heard for the last ~10 years with marketplaces. Countless startups have built marketplaces in an attempt to rid us of pesky middle-people. As far as I can tell, most of the middle-people are doing just fine. I haven’t seen the complete demise of recruiters, lawyers, accountants or real estate agents. Marketplaces may eat up a portion of the market (or grow the market, which is awesome!), but there’ll be plenty of customers for service businesses. A lot of these middle-people service businesses use the marketplaces that were meant to disrupt them in the first place.

Tech-Enabled Services Are the Key

I’ve always believed that middle-people aren’t completely eliminated, but the winners become tech-enabled. After getting my ass handed to me building a startup in the recruitment space, I realized that the best business in recruiting would be a tech-enabled recruiter. With tech, a recruiter could do more with less and build a very strong business.

I recently read an awesome post by Jake Saper and Jessica Cohen at Emergence Capital: The Death of Deloitte: AI-Enabled Services Are Opening a Whole New Market

The basic hypothesis is that we will see an emergence of hybrid companies (part AI-enabled software, part people) that will win in many markets. It’s early days, but I love this approach and 100% see how it can grow.

Here’s another great post with a similar thesis: AI is coming for your (services) job.

Getting all the Data

In my experience, as soon as a company hits ~50 people, silos emerge. Imagine a 10,000-person company, or a 100,000-person one. There will be almost as many silos as employees.

AI startups sell the promise of faster, better decision making, along with accurately automating things. The only challenge is they need a lot of data. Many AI startups will use an existing LLM to start, but then feed it more specific, proprietary data that allows them to focus on a specific vertical or market. Still, getting the data is insanely hard, and I think a lot of founders underestimate this challenge.

Big companies will struggle with the idea of breaking down their silos. Most won’t get there. It’s too hard, too scary and politically fraught.

Your AI tool, using limited data sets, will probably still be better than humans in making decisions and doing work, but the humans in charge won’t believe it or care. As soon as your solution gives a half-baked / mediocre answer, they’ll revert to how they’ve always done things: thousands of emails, meetings and silos. Efficient? No. Comfortable? Yes.

The Power of Good Enough

A common thread through many of these issues is the notion of “good enough.” In many cases, where you see egregious waste and nonsensical ways of doing things, customers will say, “Meh. I get it, this isn’t ideal, but we know how to do it and we get the job done.”

Founder: “You mean you’re happy with mediocrity and the way things are?”

Prospect: “Well that’s an insulting way of putting it, but pretty much. We have ten thousand fires burning, three million priorities and budget season is approaching. I can’t deal with this automation shit right now.”

Founder: “F**k…”

It Comes Down to Understanding Your User & Buyer

It’s never been more important to understand your user / buyer intimately. We’re seeing an explosion of AI copilot/automation tools and it’s not going to stop. Founders will attempt to automate every process in every industry, whether they understand it or not. It’ll be nigh impossible for users / buyers to differentiate between options, and it’ll only get worse.

Finding inefficient processes is easy—they’re everywhere.

Finding inefficient processes that users / customers want solved and will pay for (despite all the challenges I’ve described above) is a whole other story.

Founders: If you don’t get the difference, you’re doomed.

1. Saving Time isn’t a Strong Value Proposition

I’m not sure there’s ever been a single software product that said, “Use us and things will take longer!”

People don’t buy time savings, because it’s hard to quantify. Frankly, people don’t know what they’d do with the time. The outcome of saving time is unclear and that’s what you have to nail.

Read this: Time savings is NOT your value prop by Kyle Poyar

It’s an awesome, on-the-nose post about time saving. He brings in a CFO’s perspective, including OnlyCFO (great Substack!), who says, “Sorry, but time savings doesn’t make your software special.”

So true, but founders still don’t get this.

2. Point Solutions Will Struggle

We’re seeing a new unbundling of software happening as AI startups build new point solutions. I’ve always been a fan of starting small, going niche, and figuring out the right MVP. But it’s tough because all of the incumbents in your space are integrating AI into their solutions.

Even if their AI features aren’t as great as your AI-native/built from the ground up solution, many buyers won’t care. They’re ticking a box off a checklist (“has AI capabilities”) because their boss has told them to “go figure out this AI thing!”

A lot of AI tools today look like features. I expect those that gain traction will build out more capabilities that we’ve seen in “traditional SaaS products” so they can eat more of the use cases / customer journey. Or they’ll figure out how to integrate with everything a customer uses and hope that customers prefer an “all-in-one integrated AI solution” versus “AI capabilities in each software tool.” TBD.

3. Business Models Are Changing Fast

AI will change business models faster than ever before. If you’re eliminating the need for seats, seat-based pricing becomes challenging. AI solutions lend themselves to a usage-based business model. But the model is relatively new, and customers aren’t completely comfortable with it. This is a double whammy—AI startups are now innovating how things get done (the product) and the business model at the same time. More change = more risk and fear, but potentially more opportunity as well.

Read: Usage-based Pricing by OpenView

“AI takes automation a step further, eventually eliminating the need for whole teams of people for ongoing tasks. Monetization can no longer be tied only to human users of a product.”

You may not completely believe this, but change is afoot.

As automation tools become commonplace they eliminate the need for usage (by people) at all. If the solution is doing all the work, then no one actually uses the software; the software’s usage is triggered by users (perhaps), or integrations with other systems. I recently wrote about this: When Product Usage is Actually a Bad Thing.

AI isn’t simply shifting business models, it also has the potential to increase market sizes and improve margins. By lowering costs, AI tools can become more accessible to companies with smaller budgets (in turn driving market expansion) and lead to higher profitability. Automation reduces the need for more humans (although I’ve described why this will be challenging above), which further lowers costs, but has the potential to increase demand (driving the need for more humans, leveraging more sophisticated AI tools). I like how Peter Flint and Anna Pinol at NFX describe the merging of AI and the labor workforce:

“We do expect some roles to disappear as this fusion accelerates. But AI is likely to create a form of Jevons paradox in the long run – where increased efficiency leads to a short term reduction in a resource, but a long-term surge in demand that creates more resource use.

Applied here, that means we expect to see more demand for these types of services in the future, providing more job opportunities. To aid with that, AI also provides the opportunity for rapid workforce re-skilling, allowing people to shift careers and align themselves with those opportunities.”

4. Prioritizing Pain Points Still Matters

Just because you can solve something doesn’t mean anyone will care or pay for the solution. I’m concerned that founders are forgetting this in a rush to “automate all the things.”

While AI/automation can “fix” a lot of problems, people still prioritize their most painful issues. I’ve often said, “People don’t pay for their fifth problem to be solved.” The point is that founders still need to identify the most painful / mission critical challenges faced by users/buyers. Fixing a minor annoyance isn’t going to resonate (even if the tech is super cool).

I see a lot of founders—emboldened by AI’s capabilities and its rapid improvements—tackling problems that just don’t matter.

AI doesn’t change the fact that “if you build it, they will come” is a stupid strategy.

AI doesn’t change the notion that you should “fall in love with the problem, not the solution” — but a lot of tech founders (in particular) are forgetting this.

I’m a big believer in the potential of AI to create incredible value. We’re seeing a technological revolution that will solve problems people couldn’t fix easily or cheaply before. New use cases will emerge. AI will touch every industry, and it’s already doing so quite quickly. But the fundamentals still matter. Founders need to find real, painful problems, understand their customers deeply (beyond functional needs) and validate the business model. Otherwise you’re just building cool tech. Right now that may be enough to raise capital and get buzz, but neither of those leads to success without focusing on what actually matters.